Augmented Reality Pokémon Battles

~Now over 500,000 views on social media!~

From the ground up, I designed and built 9 custom Pokémon cards, added augmented reality functionality, designed touch interactions, and programmed a fully realized battle system, complete with stats, sound effects, and moves.

For the complete project history and ongoing progress, I continue to post update videos here:

www.tiktok.com/@artradingcards

Let’s break it down…

Nine Custom Made Cards

I made nine Pokémon cards by hand, designing them to be easier for typical augmented reality image tracking software to track.

Each card has its own signature moves inscribed on it, along with a Pokédex entry.

Built on Image Tracking

The whole project is built on the image and plane tracking capabilities of ARKit. Apple’s ARKit uses the company’s most recent computer vision algorithms to track multiple images and detect flat planes through the iPhone camera.

Using that information, I can track each card through the iPhone camera and display the Pokémon on its corresponding card.

Fully Animated Models

Just like the games, the models on my cards are fully animated. Each Pokémon has a unique spawn animation and touch interaction animation.

Dynamic Shadows

I created what I call “Dynamic shadows” for the cards. When the cards are not near a surface, the shadow from the Pokémon is constrained to the card.

But when a surface is detected, the shadow expands to its full shape, and projects onto the real world surface. This works with multiple surfaces, and the cards seamlessly transition between the two states.

Oh, and did I mention this is all using the real world lighting color and direction detected by the iPhone camera?

And to top it off… Battling

The cards can go into a “Battle Mode”. When two Pokémon enter a battle they face each other, no matter the orientation.
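
As a rough illustration of the "face each other" step (this is not the project's actual ARKit code), the turn can be reduced to a yaw calculation on the ground plane. The function names, the coordinate convention (yaw 0 faces +z, positive yaw turns toward +x), and the idea of subtracting the card's own yaw are all assumptions made for this sketch.

```python
import math

def wrap_angle(angle):
    """Wrap an angle in radians into the range [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi

def facing_yaw(own_xz, other_xz):
    """World-space yaw (radians) that points a model at the other card.

    Assumed convention: yaw 0 faces +z, positive yaw turns toward +x.
    """
    dx = other_xz[0] - own_xz[0]
    dz = other_xz[1] - own_xz[1]
    return math.atan2(dx, dz)

def local_yaw_on_card(card_yaw, own_xz, other_xz):
    """Yaw to apply relative to the card, so the card's own rotation cancels out."""
    return wrap_angle(facing_yaw(own_xz, other_xz) - card_yaw)
```

Because the card's yaw is subtracted before the rotation is applied, the model ends up pointing at its opponent however the physical card is turned on the table.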

The battles feature music, sound effects, and four unique moves per Pokémon, and they function much like the Pokémon video game battles, including misses, critical hits, and move type multipliers.
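
To make the miss, critical hit, and type multiplier rules concrete, here is a minimal sketch of how a single move could be resolved. It is written in Python for readability and is not the project's code; the `Move` fields, the tiny `TYPE_CHART`, the 1.5x critical bonus, and the default critical chance are all illustrative assumptions.

```python
import random
from dataclasses import dataclass

# Hypothetical, partial type chart: (move type, defender type) -> multiplier.
TYPE_CHART = {
    ("fire", "grass"): 2.0,
    ("fire", "water"): 0.5,
    ("water", "fire"): 2.0,
}

@dataclass
class Move:
    name: str
    move_type: str
    power: int
    accuracy: float  # chance to hit, between 0.0 and 1.0

def resolve_move(move, defender_type, rng, crit_chance=0.0625):
    """Return (damage, outcome) where outcome is 'miss', 'hit', or 'crit'."""
    # Accuracy roll: the move misses if the roll lands at or above its accuracy.
    if rng.random() >= move.accuracy:
        return 0, "miss"
    # Type pairs that are not listed in the chart deal neutral damage.
    multiplier = TYPE_CHART.get((move.move_type, defender_type), 1.0)
    crit = rng.random() < crit_chance
    damage = move.power * multiplier * (1.5 if crit else 1.0)
    return int(damage), "crit" if crit else "hit"
```

Passing the random number generator in as `rng` (for example `random.Random()`) keeps the rolls in one place and makes the outcome easy to reproduce when debugging a battle.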

Expect your classic Pokémon battle, switching between your turn and your opponent’s, each using a move, until one Pokémon faints.
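
The turn structure itself is simple enough to sketch in a few lines. The version below is only an illustration under assumptions: `run_battle`, its parameters, and the `max_turns` safety cap are hypothetical names, and each side's damage is supplied as a plain callable rather than a real move selection.

```python
def run_battle(hp_a, hp_b, damage_a, damage_b, max_turns=1000):
    """Alternate turns, A first, until one side's HP reaches zero.

    damage_a and damage_b are callables returning the damage dealt that turn.
    Returns (winner, turns), or (None, max_turns) if nobody faints in time.
    """
    hp = {"A": hp_a, "B": hp_b}
    deal = {"A": damage_a, "B": damage_b}
    attacker, defender = "A", "B"
    for turn in range(1, max_turns + 1):
        # HP never drops below zero; zero means the Pokémon has fainted.
        hp[defender] = max(0, hp[defender] - deal[attacker]())
        if hp[defender] == 0:
            return attacker, turn
        # Hand the turn to the other side.
        attacker, defender = defender, attacker
    return None, max_turns
```

The cap exists only so that a pair of zero-damage moves cannot loop forever.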

Augmented Reality in the Great Outdoors

~1st Place Project Winner in the University of Texas at Austin Fall 2019 Engineering Capstone Fair~

We built a 100% portable augmented reality headset that tracks your real-time GPS location and head orientation down to the centimeter. Without the need for special gloves or a game controller, the headset tracks your hands and lets you manipulate virtual objects projected onto the physical world. We liked it so much that we built a second headset and designed a multiplayer experience. Watch the video to experience what we created.

How did we do it?

Open Source

The headset design we used is part of an open source project by a company called LeapMotion, now known as UltraLeap. UltraLeap wanted to lower the cost barrier for getting involved with augmented reality, and released the 3D models needed to fully construct a headset that is compatible with their controller-free hand tracker, the Leap Motion Controller.

To view Project North Star on GitHub, you can visit:

To learn more about Project North Star you can read the announcement post:

To learn more about UltraLeap and their sensors, you can visit:

Controller Free Hand Tracker

No controller, no joysticks, no gloves… the Leap Motion Controller can track both of your hands through computer vision. The controller tracks your hands in the real world, and then moves matching virtual hand models inside a graphics engine.

3D Printed Material

We 3D printed almost all of the hardware for the headset using the open source models that LeapMotion created. We made some custom changes to the models to allow for integration with our special GPS antennas.

GPS Integration

The antennas that we integrated into each headset are what allowed us to achieve the centimeter-accurate positioning that powered our headsets. They let us track the real-time location and orientation of both headsets, measurements that we then translated into a graphics engine. This translation is best understood through the demonstration in the video at the top of the page. This technology was the result of a collaboration with the University of Texas Radionavigation Lab.
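
One way to picture the translation from GPS measurements into engine coordinates is the sketch below. It is not our actual pipeline: it assumes a flat-earth approximation around a fixed origin (reasonable over the few meters a wearer walks), and the function names and the axis mapping (east to x, up to y, north to z, matching Unity's y-up, left-handed axes) are choices made for this example.

```python
import math

EARTH_RADIUS_M = 6_378_137.0  # WGS-84 equatorial radius in meters

def geodetic_to_local_enu(lat_deg, lon_deg, alt_m, origin):
    """Approximate east/north/up offsets in meters from an origin.

    origin is (lat_deg, lon_deg, alt_m). Uses a small-area flat-earth
    approximation, so it is only suitable close to the origin.
    """
    lat0, lon0, alt0 = origin
    d_lat = math.radians(lat_deg - lat0)
    d_lon = math.radians(lon_deg - lon0)
    # Meters per radian of longitude shrink with the cosine of latitude.
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(lat0))
    north = EARTH_RADIUS_M * d_lat
    up = alt_m - alt0
    return east, north, up

def enu_to_engine(east, north, up):
    """Map east/north/up onto a y-up engine frame: (x, y, z) = (east, up, north)."""
    return east, up, north
```

For example, `enu_to_engine(*geodetic_to_local_enu(lat, lon, alt, origin))` gives the position to assign to a headset's camera, with `origin` being wherever the virtual scene was anchored when the session started.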

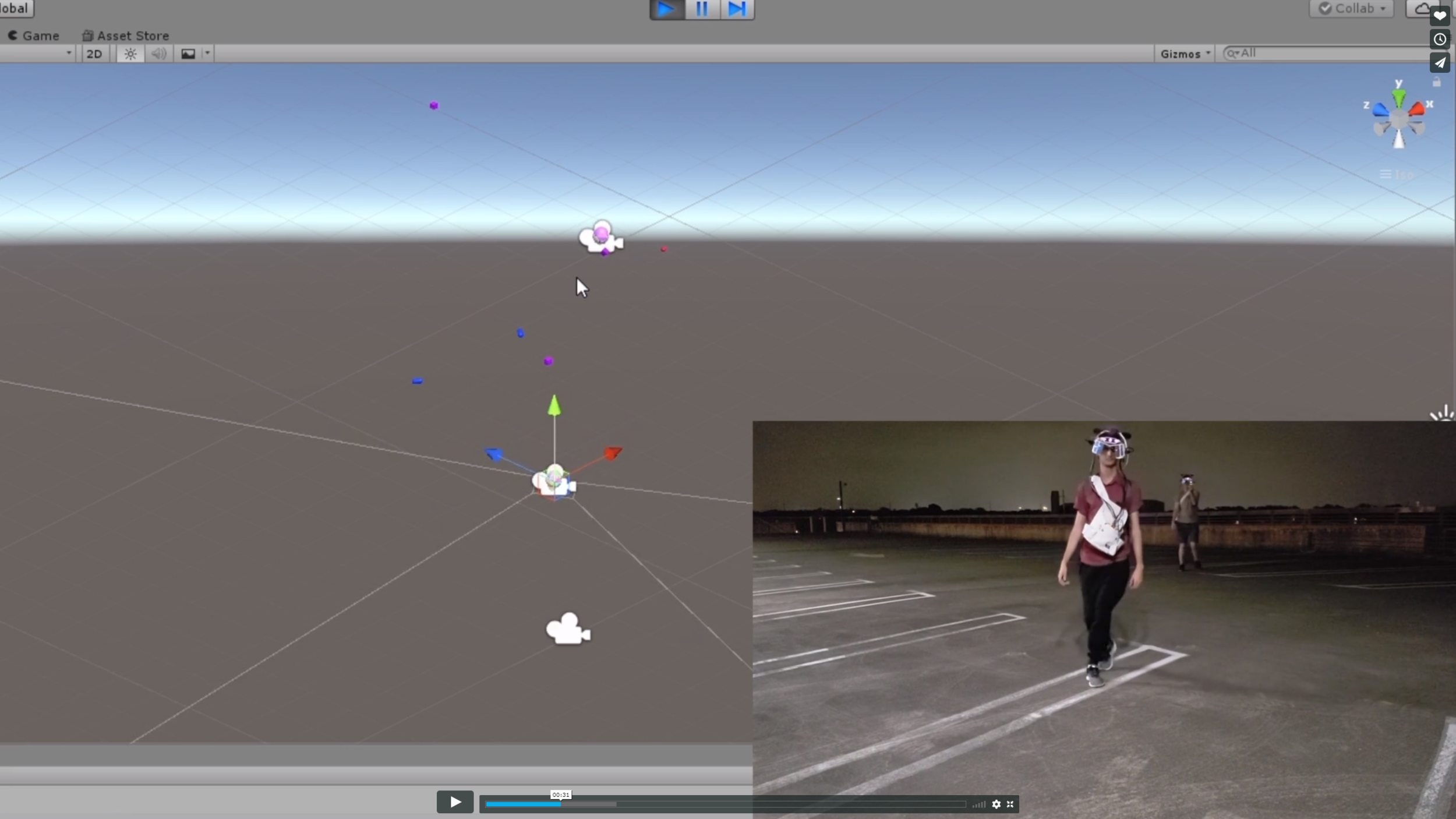

Unity

And it all comes together in Unity, the graphics engine we used. All the inputs from the real world are fed into Unity, which is where the virtual objects live. The virtual objects are then projected from a pair of LCD screens onto a pair of lenses in the user’s field of view. In the picture, you can see how the wearer’s real-time orientation matches the camera orientation in Unity.

Udoo x86

What was running the Unity executable? An Udoo x86, an embedded mini PC that can briefly be seen in my hands in this picture. We were able to install Windows on this board and use it to run the Unity executable. Because the board could be powered from a portable battery, our headsets did not need to be hooked up to a full-size computer and could run tether-free. For information on the Udoo board, you can check out: https://www.udoo.org/

The Final Product

In the end, we created a game where the headset wearer could create, resize, and destroy virtual objects that would appear in front of them, projected onto the real world. We made two headsets, and an object placed by one wearer could be picked up and manipulated by the second wearer. We wanted to create an immersive experience that proved how GPS could be integrated with augmented reality.

IoT Bike Anti-Theft Water Bottle

~2nd Place winner in the Spring 2018 Embedded System Design Class Competition~

We gathered an Arduino, a few extra hardware pieces, and the software services of ThingSpeak, and put it all together in a water bottle to create a prototype for a discreet anti-theft device that would counter the bike theft problem that plagues Austin, Texas. The best part? The water bottle sends out a tweet whenever it senses movement.

The Inspiration

The city of Austin, Texas faces a serious problem with bike theft. In 2017, over an eight-month period, 785 bike theft cases were reported to the Austin Police Department, and that doesn’t account for the cases reported to the University of Texas Police Department, or the thefts that go unreported. When my partner and I were tasked with creating an embedded system project, we decided to create a prototype that could address the real-world issue of bike theft, a problem that we, as bike-owning university students, had experienced firsthand.

It started with an Arduino

We decided the hardware we would need was an Arduino, an accelerometer/gyroscope, and an ESP8266, a chip that allows the Arduino to connect to Wi-Fi. When we were putting everything together, we wanted the device to be discreet, so we decided to house all the hardware in a plastic water bottle. Our goal was to create a system that could react to movement.

And then it evolved to Twitter

Along the way, we had a thought… what if this IoT device had a presence on social media? We used ThingSpeak, an IoT service website similar to IFTTT, and set up a system so that when the accelerometer sensed movement above a set threshold, the Arduino would send a signal to ThingSpeak. ThingSpeak would interpret this signal and post a tweet on the bike’s Twitter account with a personalized message, alerting the world that the bike was being stolen. In the end, we had created a prototype for a marketable IoT device.
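
The threshold check at the heart of the device can be sketched as follows. The real firmware ran on the Arduino; this Python version is only an illustration, and the function names, the 0.15 g threshold, and the cooldown that keeps the bottle from alerting on every sample are assumptions, not values from our build.

```python
import math

def movement_detected(sample_g, baseline_g, threshold_g=0.15):
    """True when acceleration differs from the resting baseline by more than the threshold.

    sample_g and baseline_g are (x, y, z) readings in units of g.
    """
    delta = math.sqrt(sum((s - b) ** 2 for s, b in zip(sample_g, baseline_g)))
    return delta > threshold_g

def should_alert(now_s, last_alert_s, moved, cooldown_s=60.0):
    """Allow an alert only if movement was seen and the cooldown has elapsed.

    last_alert_s is None until the first alert has been sent.
    """
    if not moved:
        return False
    return last_alert_s is None or (now_s - last_alert_s) >= cooldown_s
```

In a loop, the device would read the sensor, call `movement_detected` against a baseline captured while the bike was parked, and signal the IoT service only when `should_alert` returns true.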